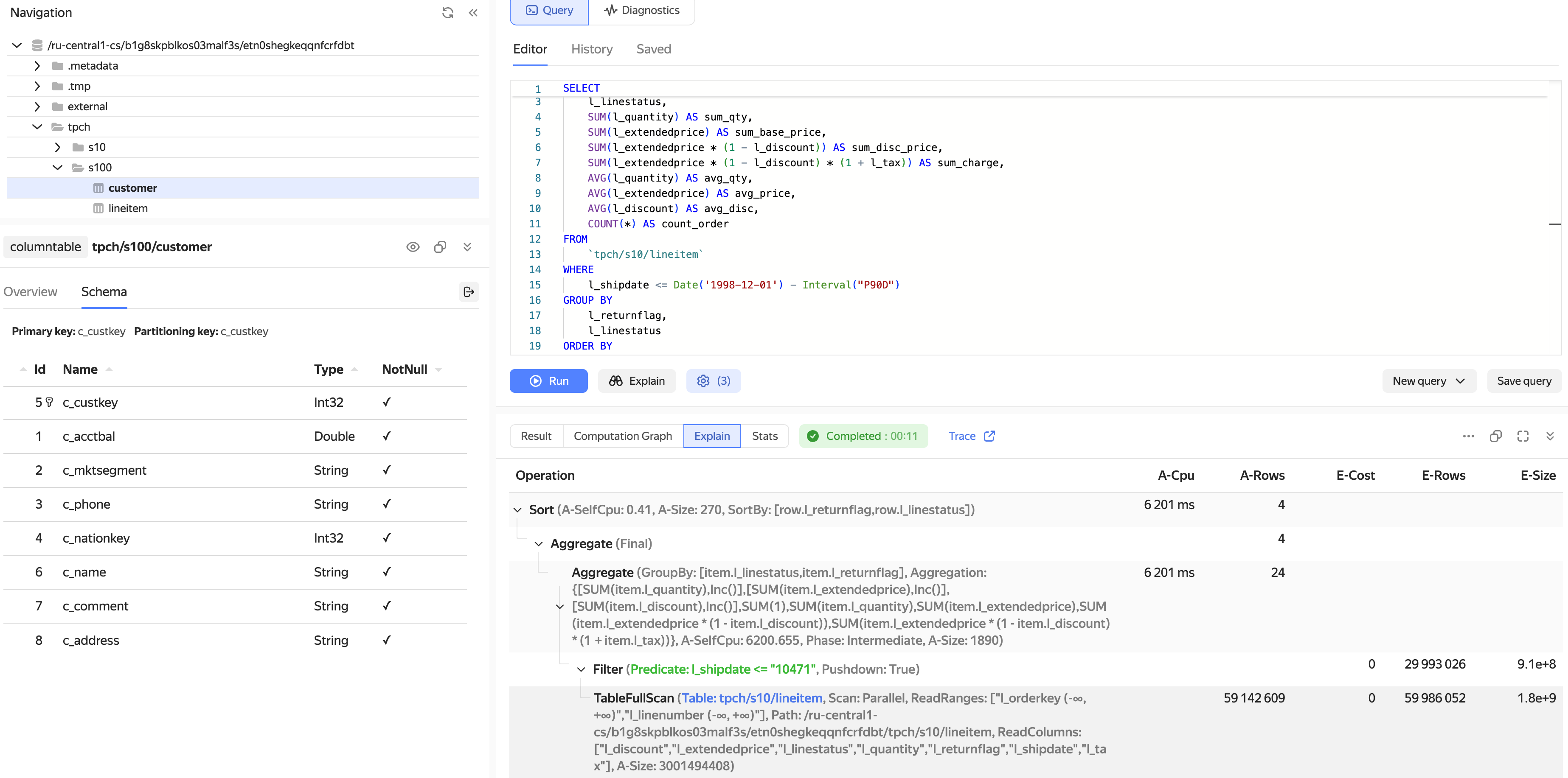

-- Create a columnar table
-- (primary key columns of a column-oriented table must be NOT NULL)
CREATE TABLE transactions_columnar (
    transaction_id Uint64 NOT NULL,
    transaction_date Date NOT NULL,
    revenue Double,
    PRIMARY KEY (transaction_date, transaction_id)
) WITH (
    STORE = COLUMN
);

Analytical capabilities of YDB

Columnar storage, parallel processing, and a cost-based optimizer for heavy analytical queries.

Key advantages

YDB is a distributed, fault-tolerant SQL DBMS for building scalable, highly available services. It provides strict consistency and fast data processing, and is well suited to high-load analytical workloads.

Separated compute and storage

Storage and compute scale independently, so each layer can be sized to the workload as data volume and query complexity grow.

High processing speed

MPP (Massively Parallel Processing): queries execute in parallel across nodes, and performance grows linearly as you scale.

One database. All types of analytical queries

Data marts, complex JOINs, and heavy ELT queries are all available in a single database.

Big data analytics

At the core of YDB are columnar tables and MPP architecture: heavy queries execute predictably and scale with cluster growth.

Columnar tables

Optimized for large datasets, with efficient compression and data transfer.

Parallel execution

Scans and joins run across all nodes; performance grows linearly.

Data marts and BI

Fast dashboard response on columnar tables, with strong ClickBench benchmark results in data mart scenarios.
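As a rough illustration of the data mart scenario, a daily revenue rollup over the transactions_columnar table from the top of this page might look like the sketch below. The date range and the output column names (total_revenue, transaction_count) are assumptions chosen for the example, not part of the original schema.

```sql
-- Daily revenue rollup for a dashboard (illustrative sketch).
-- Only transaction_date and revenue are read, which is the access
-- pattern columnar storage is designed for.
SELECT
    transaction_date,
    SUM(revenue) AS total_revenue,      -- hypothetical output name
    COUNT(*) AS transaction_count       -- hypothetical output name
FROM transactions_columnar
WHERE transaction_date >= Date("2024-01-01")   -- assumed reporting window
  AND transaction_date < Date("2024-02-01")
GROUP BY transaction_date
ORDER BY transaction_date;
```

Filtering on transaction_date, the first primary key column, keeps the scan limited to the requested range rather than the whole table.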

Designed for heavy queries

Automatic partition rebalancing, no single point of failure, continuous storage optimization, and predictable execution plans.

Separation of compute/storage

CPU and storage layers scale independently to minimize total cost of ownership (TCO).

Cost-based optimizer

A modern cost-based optimizer selects optimal plans for queries with dozens or hundreds of tables.

Data tiering in S3 (in development)

Automatic migration of 'cold' data to S3-compatible storage to reduce storage costs, while retaining full query access.

Your data processing center

Built-in topics with Kafka API support, reads from many external sources, and support for working with data lakes.

Streaming data ingestion

Receive real-time data streams from any source using the Kafka API.
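A topic is the entry point for streamed data. The sketch below declares one in YQL. The topic name, consumer name, and retention period are hypothetical, and the option names should be checked against the CREATE TOPIC reference for your YDB version.

```sql
-- Declare a topic with one consumer (illustrative sketch).
-- `transactions_stream` and `mart_loader` are hypothetical names.
CREATE TOPIC `transactions_stream` (
    CONSUMER mart_loader
) WITH (
    retention_period = Interval('PT12H')  -- assumed: keep messages for 12 hours
);
```

Kafka clients would then produce to and consume from this topic through the database's Kafka API endpoint, using their usual producer and consumer configuration.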

Batch data ingestion

Load data using the Apache Spark connector, JDBC, Fluent Bit/Logstash, or SDKs for various programming languages.

Built-in data transfer

Update data marts from OLTP tables and external systems using the built-in TRANSFER mechanism.

Most tasks are solved with SQL

Familiar data engineer tools

Data transformations with the dbt plugin

The dbt adapter for YDB lets you describe models, incremental updates, and tests in familiar syntax and run them directly in YDB.

Orchestration with Airflow

Integration with Airflow lets you run DAGs that load and transform data in YDB, managing dependencies, retries, and checks at each step.

Big data processing with Apache Spark

The Spark connector enables fast ETL and analytics by reading data in parallel directly from each YDB node.

Analytics and query optimization

YDB provides analysts with everything they need to work with data.

BI integrations

Build interactive dashboards and reports in familiar BI tools. YDB integrates natively with Apache Superset, DataLens, Polymatica, and others.

Query performance analysis

Analyze and optimize each query using its detailed execution plan (EXPLAIN / ANALYZE), and pin the chosen plan with query hints.